Elon Musk is at it again - warning about the increasing dangers from artificial intelligence (AI). But this time, he’s ringing the bell longer and hitting it harder. Clearly, he is becoming more concerned about this technology. But what could possibly be so worrisome about a technology that makes products faster and cheaper and lowers overall costs? Plenty, as he tells it.

One of those worries is the AI experts themselves. In his view, they think they are smarter than they actually are, and this could set the stage for a variety of serious problems.

“They define themselves by their intelligence and they don’t like the idea that a machine can be way smarter than they are so they discount the idea,” he said. “But I’m very close to the cutting edge in AI and it scares me. It’s capable of doing vastly more than most people realize and the rate of improvement is exponential.”

Numbers Tell The Story

Growth in numbers can be measured in various ways. One is arithmetically. This means a constant number or amount is added regularly. Take as an example a child who puts a dollar into a piggy bank every week. The amount being added remains the same - it’s always one dollar. So the total number of dollars increases steadily, going from 1 in the first week to 2, 3, 4, and 5 in the following weeks.

Now suppose the child adds more than one dollar a week - enough to make the total increase exponentially. In this case, the total grows much faster. In the first week the child has one dollar. But the total doubles to 2 in the second week, then to 4 the week after, and then to 8, 16, 32 and so on in the following weeks.
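The piggy bank comparison can be sketched in a few lines of Python. This is only an illustration of the two growth patterns described above, not anything Musk presented; the function names `arithmetic_totals` and `doubling_totals` are made up for this example, and the doubling version assumes the total exactly doubles each week.

```python
def arithmetic_totals(weeks: int, deposit: int = 1) -> list[int]:
    """Running totals when the same deposit is added every week."""
    return [deposit * week for week in range(1, weeks + 1)]


def doubling_totals(weeks: int, start: int = 1) -> list[int]:
    """Running totals when the total doubles every week (assumed growth rule)."""
    return [start * 2 ** (week - 1) for week in range(1, weeks + 1)]


if __name__ == "__main__":
    # Print the two piggy banks side by side for the first six weeks.
    pairs = zip(arithmetic_totals(6), doubling_totals(6))
    for week, (steady, doubled) in enumerate(pairs, start=1):
        print(f"week {week}: steady = {steady}, doubling = {doubled}")
```

Extending the range shows how quickly the two totals diverge: the steady saver's total rises by one each week, while the doubling total roughly matches the gap between the two after every single step.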

Improvements in AI technology are unfolding exponentially, Musk warns - and could soon reach a point where progress becomes more difficult to predict - and to control.

Fun And Games

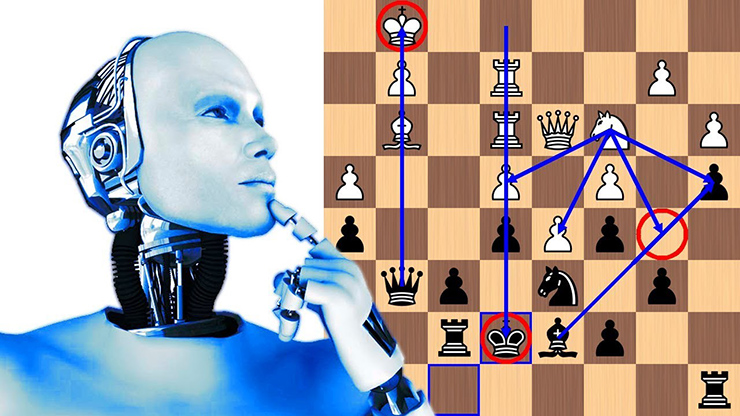

AlphaGo is an AI program developed by Google DeepMind that specializes in the game Go, a Chinese strategy board game. Experts say that Go is far more complicated than chess. AlphaGo was developed to compete against humans.

At first AlphaGo had just modest capabilities, and was unable to beat a skilled Go player. But in the space of less than nine months, it improved to the point of being able to beat the European champion (who was then ranked the 600th best player in the world), and then to beating Lee Sedol, who had been a world champion for many years.

AlphaGo subsequently beat the current world champion. And then it beat all opponents - while playing them simultaneously.

But the advances in this technology have not stopped there. An improved version of AlphaGo called AlphaZero was developed, and it absolutely crushed AlphaGo. No one expected that much improvement and certainly not so quickly. Incidentally, AlphaZero learned the game by playing against itself, and it is capable of learning to play any game whose rules it is given. The rate of improvement is both obvious and dramatic - and AlphaZero’s ability to learn extends to areas far beyond games. Musk is worried that this technology will be weaponized.

“AI is the single biggest existential crisis that we face and the most pressing one,” he says. “I’m not normally an advocate of regulation and oversight... but this is a case where there is a very serious danger to the public and therefore there needs to be an authoritative body that has insight and oversight to confirm that everyone is developing AI safely.

“This is extremely important because the danger of AI is much more (palpable) than nuclear warheads by a wide margin and nobody would suggest that we just allow anyone to build nuclear warheads if they want to without regulating it. That would be insane. And mark my words: AI is far more dangerous than nukes.”

Musk says that the short-term concerns about AI are that it will result in dislocation and lost jobs - important issues to be sure, particularly to those who will be displaced. But the longer-term dangers of digital super intelligence are far worse. “If humanity collectively decides that creating digital super intelligence is the right move then we should proceed very carefully.”

Musk has been rated the richest person in the world. As the head of Tesla, SpaceX, Neuralink, and other cutting-edge companies, he is more than familiar with the latest developments in high tech, so his opinion is certainly a very informed one. And he believes that, as a result of AI getting out of control, the world could experience another Dark Ages.

“My guess is there probably will be one at some point. I’m not predicting that we’re about to enter a Dark Ages, but there’s some probability that we will, particularly if there’s a third World War.”

So what’s the solution? Musk thinks that “a seed of civilization” needs to survive somewhere so that in the event of a global catastrophe it could help bring the world back to normal and shorten the length of the Dark Ages if one develops.

“It’s unlikely that there will never be another World War and that’s why it’s important to get a self-sustaining base on Mars. It’s far enough that if there is a war on Earth a Mars base would be likely to survive. It would be really important to get this done before a possible World War III.”

Musk is not the only tech guru who is worried about AI. Dr. Michio Kaku, a theoretical physicist who comments about all things science, was asked for his thoughts on this subject. “It will make life more convenient, things will be cheaper, it will open new vistas and new industries will be created. I think AI will be bigger than the auto industry,” he said. “But let’s not be naive. There is a tipping point where AI could be dangerous and become an existential threat.”

Bill Gates agrees. “I do think we have to worry about this. It’s not inherent that AI will always have the same goals in mind that we do.”

The late British physicist Stephen Hawking said, “The development of full artificial intelligence could spell the end of the human race. It would take off on its own...and humans couldn’t compete.”

Physicist Max Tegmark said, “Once you get to the point where machines are better at building AI than we are, future AI could be built not by engineers but by machines, except they might do it thousands or even millions of times faster than humans can.”

And there are more immediate dangers. AI now makes it possible to analyze and track an individual’s every move online, as well as what he or she does from day to day. Cameras are nearly everywhere and facial recognition algorithms can recognize anyone.

AI tracks people’s activities, down to how they spend their time - even what video games they play and whether they jaywalk. In the wrong hands all of this information can be used for social oppression and discrimination. There can also be a misalignment between the ordinary goals of humans and machines. For example, a driver may give a self-driving car a simple command to go to the airport as fast as possible, but in carrying it out the car might leave behind a trail of accidents and havoc.

AI is already being used in virtually every industry, but as the technology progresses safeguards need to be installed to ensure that it is used for the benefit of people and not for sinister purposes or by unscrupulous individuals. What are the chances that it will be used properly?

Sources: www.whatis.techtarget.com; www.unescwa.org; YouTube Videos: Elon Musk: My Final Warning; Increasing Dangers From AI; Is AI A Special-level Threat to Humanity?; Is Artificial Intelligence Dangerous?; Ten Risks Associated With Artificial Intelligence; What Are The Potential Risks Of AI

Gerald Harris is a financial and feature writer.